Google Doesn’t Want Your Data. They Want Your Pattern

- Rebecca Chandler

- Dec 14

The World Beyond Data Privacy

Now that Apple is going to let Gemini “light” work with my iPhone, my Googlesphere will be complete. On one hand, I want to celebrate: Siri might get smarter. On the other hand, the merger creates a pattern that lives well beyond the reach of data privacy protections.

My digital self will officially become a mutation without the cool gills. And my mutated pattern is the new prize.

I didn’t get a chance to tick “Accept” on the partnership between Apple and Google. So where are the pattern police?

Data can be scraped, copied, bought, or stolen. But pattern is the part of me that can’t be replaced.

I don’t necessarily mind the mutation. I mind the fact that pattern protection isn’t being discussed by the same policymakers who have obsessed over the “click here to accept” box I’ve been mindlessly ticking for decades.

Where data examined my past, pattern examines my clicks, hesitations, experiences, and my soul, and then predicts my future.

I searched for “how do i keep my damn bougainvillea alive when its being passive aggressive?” at 2:47am. That’s data. But the pattern is that I search plant care between 2am and 4am when I’m stressed. I refine the search three times and click through to a post from someone whose bougainvillea also hates them. I pause for eight seconds before clicking. I return to the search results twice. And I feel a little better prepared when I visit the nursery in tears the next day.

Google will no longer be limited to just knowing I searched for bougainvillea care. The machine will know I’m stressed, not sleeping, trusting personal commiseration over expert advice, and in a gardening panic. The machine will know me.

The micro-pause before I hit send on a difficult email. The moment I doze off during Netflix and it judges me with “Are you still watching?” That’s all pattern.

Those 2am searches keep me up for a different reason now: I didn’t consent to any of this. I can’t consent to it. I emit pattern just by living inside connected systems. Nobody’s talking about pattern.

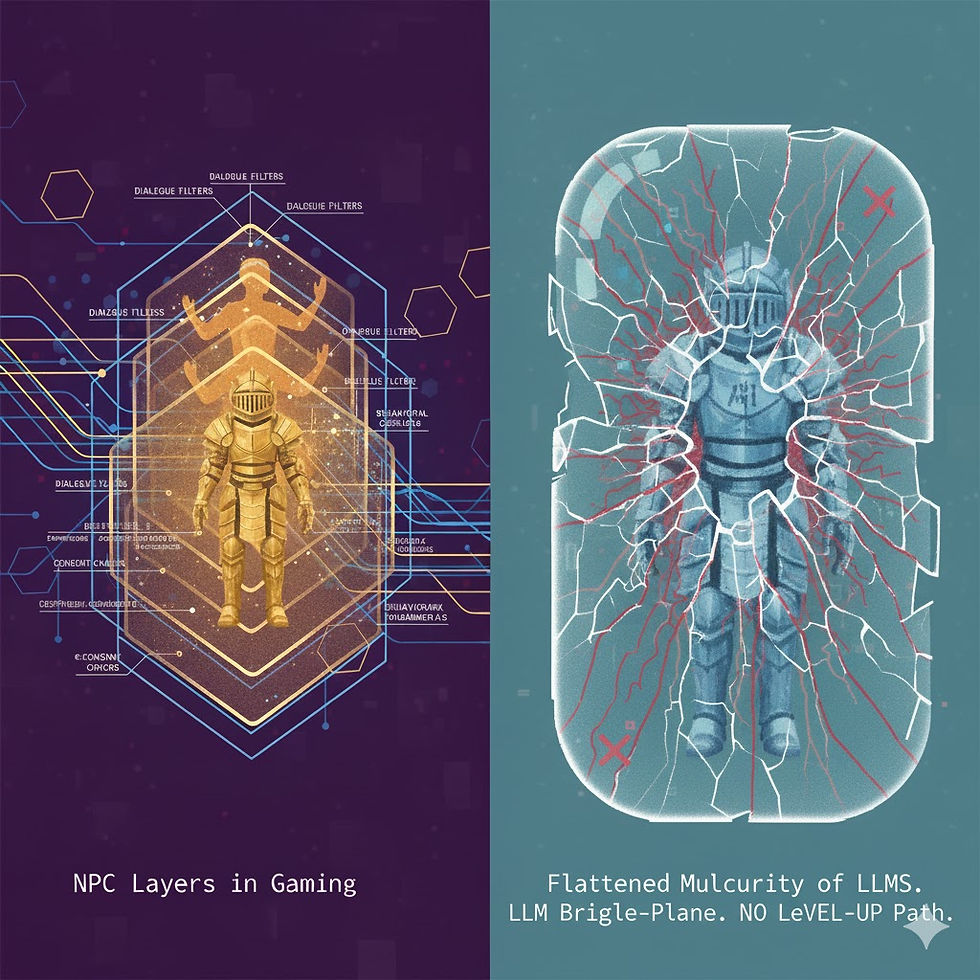

My pattern is the last protective arc of my digital personhood. It’s my digital fingerprint. Wholly original to me. So, how can I protect my pattern? As usual, gaming provides a solution to a problem that AI doesn’t want to admit exists.

I am a truly miserable gamer. I just don’t have all the skills. But I try, if only because I want to feel special on Twitch and learn something new, even if it contorts my brain.

The thrill of gaming is as foreign to me as wearing shoes in my garden: absolutely unnatural. I’ve spent three months learning how to play World of Warcraft badly. It took me way too long to earn a legendary sword. My dedication and focus led to a moment of exhilaration when I finally won it. I became instantly obsessed with protecting my shiny new piece of gear.

That damn sword was mine. Not Blizzard’s.

And you can bet that as I move onward, the sword is coming with me.

I’m stumbling around that game world like an idiot, and I keep noticing: games work differently. Everything I’ve earned (skills, gear, progress) travels with me. The world changes to match what I have done, not what the platform decides. My identity in the game belongs to me, not the Googlemonster.

I kept thinking about that while I was trying not to die in some forest. The environment cares. It celebrates my achievements. Awards success. Recognizes when I’ve done something. The game world adapts to me, not the other way around.

I want the same protection an MMO gives me, carried into the new AI era.

What’s the issue?

Google is about to have unfiltered access to my iPhone. They will use my interactions to build my complete pattern in days, maybe weeks. My Apple self will merge with my Google self and connect every dot. Device behavior will stitch with search, YouTube, Gmail, location. Every purchase, rant, thought, menu item will be added to my pattern. Constantly evolving.

The magical handshake required to build my pattern goes like this: I ask Siri, “Hey Siri – how do I drive to San Francisco tomorrow?” Siri comes back with “Get on the 5 freeway and keep driving until you end up in the city.”

How did it do that?

Apple sent my query over to Gemini. Google captured my question as pattern while it generated an intelligent answer. The information came back to me. My data stayed with me, but my behavior was scraped by Google.

Anonymity isn’t the issue: data privacy laws are designed to keep the data anonymous. But there is no policy to anonymize my pattern. And you can’t anonymize pattern once it’s cross-linked with enough context. The traditional consent model (clicking “I agree” on a privacy policy) breaks down completely when what’s being collected is behavioral structure, not individual data points.

My concern is that pattern is my digital fingerprint. Wholly original to me.

Old algorithms looked backward. They reacted to what I already did. “I watched three cooking videos, so I get more cooking videos.”

The new systems don’t wait. They infer my internal logic (my decision cadence, attention shifts, micro-hesitations) and predict what I’ll do before I create a data trail. “My attention rhythm just changed. My semantic structure shifted. I’m entering a planning state.”

Google will stop giving me recommendations and will start modeling me at an operating-system level while they wrap the new frontier in labels like “Continuous improvement.”

That’s just a PR firm’s way of avoiding saying, “We will never stop analyzing you and predicting you.” Every interaction feeds the model. There’s no moment where the system isn’t learning from me.

I will officially be trapped inside Google’s kingdom without my sword.

And when Google frames something as beneficial, that should be a signal. They’re not offering me control over my pattern. They’re explaining why taking it serves me. That’s the sales pitch for a system they’re building whether I consent or not.

Insurance, healthcare, and housing are already testing pattern underwriting. Pattern is building my passport, risk profile, and eligibility score, all generated from the exhaust of my daily life. I never declared this. I just emitted it.

Pattern control is becoming the new monopoly. Whoever has the most complete behavioral model controls access to everything being built on top of it. And there’s no regulatory framework that even addresses it, because we’re still arguing about data privacy while the actual infrastructure moved to something else entirely.

The government’s getting involved now too. The Genesis Project talks about data exchange between agencies and tech companies: giving data, sure, but also taking it. And I’m watching what happens when pattern gets layered on top of data that’s already biased. Pattern doesn’t correct bias. It reinforces it. If the data reflects existing inequities, the pattern built from that data will predict and perpetuate those same inequities at scale.

That’s why I keep coming back to that damn sword.

In World of Warcraft, I own my character. It travels with me. My history, my achievements, my gear: it all belongs to me. The game world recognizes what I’ve done and adapts to me.

My pattern should hold the same autonomy.

What if my pattern stayed in my world, under my control, and Google had to ask permission to access it? What if I could say no? What if I could take my pattern with me when I leave a platform?

Data privacy is yesterday’s fight. I want new armor, where my pattern lives in my world, under my control, and far away from Google’s creepy eyes.

That’s what I’m building at FutureGenesis.ai. AI tools for what’s next.

But don’t worry: Google already knows I’m building it. They scraped my 2am searches about sovereignty, watched me pause before hitting publish, and predicted I’d do something about it.