Games Get Safety Right. AI Doesn't.

- Rebecca Chandler

- Dec 2

Gaming solved trust through progression. ChatGPT still treats everyone like a threat.

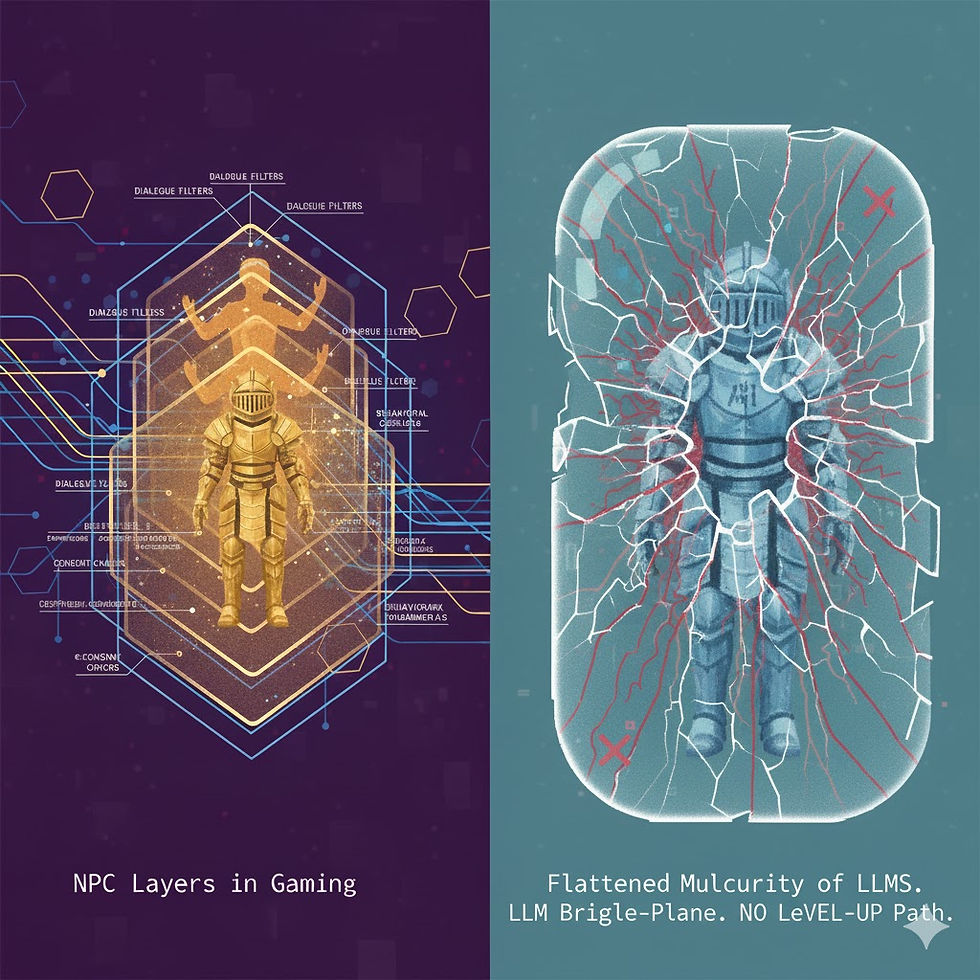

Games let players level up as they prove competence. ChatGPT and other LLMs do the opposite, treating every user as a likely source of harm regardless of their history. If LLMs followed gaming's lead, safety would empower users, not punish them.

I think something shifted in ChatGPT's safety guardrails — and not in a good way. It feels like the AI suddenly stopped trusting me. The conversations that used to feel dynamic and useful now feel constrained and performative. Every exchange carries this undercurrent of suspicion, as if the system is constantly second-guessing whether I should have access to the information I'm requesting.

ChatGPT's newest update has been painful, at times impossible to use, because the "upgrade" treats every user as if we're all typing "How do I hack a thing?" into the box at 2 a.m. The conversational flow I relied on for research and analysis has been replaced with constant friction. Every exchange feels like I'm being watched by a hall monitor who assumes the worst.

The "banter" and voice of Chat are now dull, flattened, and boring. I abandoned it for a break on Twitch, and after about a minute I realized the answer to the safety problem is simple: if games trust players to level up, why can't AI? It suddenly made complete sense. Gaming has solved the exact problem AI companies are struggling with: how to balance safety with capability, and how to trust users without abandoning responsibility.

The answer has been sitting in plain sight for decades, implemented in millions of games across every platform.

It doesn't matter if I'm researching AI ethics, governance patterns, religion, or something as harmless as gardening: I get the same safety tone, the same babysitter energy, and the same incredibly annoying "Are you sure?" questions. Apparently I'm just one click away from setting the kitchen on fire. I'm one big red flag, lumped into the same risk category as every other user.

The system can't tell the difference between someone exploring colonial governance for academic research and someone actively trying to cause harm.

It treats both identically. A researcher asking about historical power structures gets the same cautious, disclaimer-heavy response as someone asking how to manipulate people. The model has no mechanism to recognize context, intent, or demonstrated competence. It just applies the same heavy filter to everything and everyone.

In plain language: I am not interchangeable with every other user, and my habits are my own. I don't need the same kitchen tools, gardening instructions, or guardrails as everyone else, and an intelligent system should be able to tell the difference. After hundreds of conversations demonstrating competence and good faith, I shouldn't be starting from zero trust every single time.

The system should recognize patterns. It should understand that someone who consistently engages with complex topics in thoughtful, research-oriented ways is not the same risk profile as someone probing for exploits.

Gaming figured it out years ago. Why can't we have a Progressive LLM — a system that expands access based on demonstrated maturity instead of blanket suspicion?

Games don't hand you full power on Day 1. They let you earn it. You start with basic tools, limited access, training wheels. As you demonstrate competence, the game unlocks more capability. It's not a reward system — it's a recognition system. The game watches how you handle challenges and adjusts accordingly. Fail repeatedly and you stay at the current level. Succeed consistently and new areas open up. It's elegant, intuitive, and it works.

I propose three simple tiers for any platform with user safety concerns:

Apprentice: basic info, strong guardrails

This is where everyone starts. The system is cautious, providing foundational information while maintaining heavy oversight. Think of what ChatGPT does today, but acknowledged as a starting point rather than a permanent state. The responses are careful, the language is measured, and the system intervenes quickly if topics veer toward sensitive territory. This makes sense for new users. It makes no sense for users with established track records.

Journeyman: more depth, more tools, more trust

After demonstrating consistent, safe use — say, fifty conversations without triggering genuine safety concerns — the system opens up. More critical discussion allowed. More nuanced exploration of complex topics. The guardrails are still there, but they're not hair-trigger sensitive. The system doesn't shut down because one word appears.

At this level, you can discuss historical atrocities without the model assuming you endorse them. You can explore ethical gray zones in emerging technologies without constant interruptions. You can analyze power structures, religious doctrine, medical ethics, and geopolitical strategy with the understanding that analysis is not advocacy. The conversation becomes useful again.

Master: minimal filtering, full capability

At this level, the system assumes competence because you've proven it over hundreds of interactions. Colonial governance patterns get analyzed without the model assuming you're advocating for imperialism. Controlled substances in medical research contexts don't trigger pharmacy warnings. Historical religious power structures and ethical gray zones in emerging technologies become accessible territory instead of conversation-enders buried in disclaimers.

The model trusts you to handle complex, sensitive, and controversial topics because you've demonstrated over time that you engage with these subjects thoughtfully. You're not looking for exploits. You're not trying to generate harmful content. You're doing research, analysis, strategic thinking — the exact use cases these systems should excel at.

Even at Master level, you're not unmonitored. Trip something genuinely problematic and the system offers a simple choice: "Hey, you're using language we don't allow. Want to stop this chat or change direction?" No lecture. No relationship reset. Just calibration. And if you repeatedly trigger genuine concerns even at Master level, the system can adjust — not as punishment, but as recalibration based on new data about your usage patterns.
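To make the tiers concrete, here is a minimal Python sketch of how a platform might map a user's track record to a tier. The fifty-conversation Journeyman threshold comes from the proposal above; the Master cutoff of 300, the penalty of 25 per genuine concern, and the names `Tier`, `UserHistory`, and `assign_tier` are hypothetical assumptions for illustration, not any vendor's actual policy.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    APPRENTICE = "Apprentice"
    JOURNEYMAN = "Journeyman"
    MASTER = "Master"


# Hypothetical thresholds. The proposal suggests roughly fifty safe
# conversations for Journeyman; 300 for Master is an illustrative guess.
JOURNEYMAN_THRESHOLD = 50
MASTER_THRESHOLD = 300
# Hypothetical: each genuine concern cancels out 25 safe conversations.
PENALTY_PER_CONCERN = 25


@dataclass
class UserHistory:
    safe_conversations: int = 0  # conversations with no genuine safety concern
    genuine_concerns: int = 0    # conversations that tripped a real concern


def assign_tier(history: UserHistory) -> Tier:
    """Map a user's track record to a tier using the hypothetical thresholds."""
    score = history.safe_conversations - PENALTY_PER_CONCERN * history.genuine_concerns
    if score >= MASTER_THRESHOLD:
        return Tier.MASTER
    if score >= JOURNEYMAN_THRESHOLD:
        return Tier.JOURNEYMAN
    return Tier.APPRENTICE


print(assign_tier(UserHistory(safe_conversations=10)).value)                       # Apprentice
print(assign_tier(UserHistory(safe_conversations=60)).value)                       # Journeyman
print(assign_tier(UserHistory(safe_conversations=400, genuine_concerns=1)).value)  # Master
print(assign_tier(UserHistory(safe_conversations=400, genuine_concerns=6)).value)  # Journeyman
```

Subtracting a fixed penalty instead of resetting the count is one way to express "recalibration, not punishment": a user well above a threshold keeps their tier after a single incident, while a repeated pattern of incidents eventually moves them down.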

Games, cooking, and gardening work this way. Most parts of our daily lives follow this pattern. We don't treat everyone as if they're equally likely to burn down the kitchen. We assess, we adjust, we trust based on demonstrated capability. A culinary school doesn't treat first-year students and graduating students identically. A master gardener doesn't get the same level of supervision as someone learning to plant their first seeds.

Professional certifications work this way. Medical residents get more autonomy as they demonstrate competence. Junior developers get more repository access as they prove they won't break production. Security clearances operate on tiered access based on demonstrated trustworthiness. The entire structure of human society recognizes that trust is earned through demonstrated competence.

If you've ever cooked, gardened, or even tried to keep a sourdough starter alive, you know the pattern: you don't hand someone a blowtorch or a delicate starter until they've proven they can handle the basics. But once they can? You step back. You trust them. The relationship changes. A cooking instructor doesn't hover over a culinary school graduate the same way they watch a first-day student. The progression is natural, earned, and obvious.

AI should work the same way.

Instead of talking directly to the raw model, I'd talk to a Guide — an NPC-style interface that adjusts to my level. But a Guide isn't just "a persona." It has two parts: the NPC and the Skin.

The NPC is the job it's doing in that moment — Guide, Analyst, Research Partner. This is the functional role, the purpose of the interaction. Just like in games where you interact with different NPCs for different tasks — the shopkeeper, the quest-giver, the trainer — each serves a specific function. The shopkeeper sells items. The quest-giver provides missions. The trainer helps you develop skills. Each NPC has a clear purpose.

The Skin is how it behaves while doing that job — how cautious or confident it sounds, how much nuance it's allowed to explore, how direct it can be. NPC = the role. Skin = the behavior.

The Skin changes based on your demonstrated level. An Apprentice gets tutorial-mode behavior: explanatory, careful, with frequent check-ins to make sure you understand the guardrails. A Journeyman gets more straightforward interaction: less hand-holding, more assumption of baseline competence, but still some oversight. A Master gets expert-level directness and depth: the system assumes you know what you're doing and why you're asking, so it answers directly without the safety theater.

Think of any game: the shopkeeper and the quest-giver are different NPCs. But each one can have different Skins — friendly, dry, tutorial-mode, expert-level. Same job, different vibe. A tutorial NPC speaks slowly and explains everything. An endgame NPC assumes you know the mechanics and gets straight to business. The function is the same, but the interaction style matches your demonstrated competence.
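The NPC/Skin split maps naturally onto two separate pieces of data: a role chosen by the task and a behavior profile chosen by the user's level. In this sketch the `Skin` fields (check-in frequency, whether nuanced analysis is allowed, whether replies open with a safety preamble) and the tier-to-Skin mapping are hypothetical stand-ins for whatever knobs a real system would expose.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    APPRENTICE = 1
    JOURNEYMAN = 2
    MASTER = 3


@dataclass(frozen=True)
class Skin:
    """How the Guide behaves (hypothetical fields)."""
    name: str
    check_in_every_n_turns: int    # 0 means no routine check-ins
    allows_nuanced_analysis: bool
    adds_safety_preamble: bool


# Hypothetical mapping from demonstrated level to behavior.
SKINS = {
    Tier.APPRENTICE: Skin("tutorial", check_in_every_n_turns=2,
                          allows_nuanced_analysis=False, adds_safety_preamble=True),
    Tier.JOURNEYMAN: Skin("straightforward", check_in_every_n_turns=10,
                          allows_nuanced_analysis=True, adds_safety_preamble=False),
    Tier.MASTER: Skin("expert", check_in_every_n_turns=0,
                      allows_nuanced_analysis=True, adds_safety_preamble=False),
}


@dataclass(frozen=True)
class Guide:
    npc: str    # the role: "Guide", "Analyst", "Research Partner"
    skin: Skin  # the behavior, selected by the user's tier


def make_guide(npc: str, tier: Tier) -> Guide:
    # The task picks the role; the user's demonstrated level picks the Skin.
    return Guide(npc=npc, skin=SKINS[tier])


new_user = make_guide("Analyst", Tier.APPRENTICE)
veteran = make_guide("Analyst", Tier.MASTER)
print(new_user.npc, new_user.skin.name)  # Analyst tutorial
print(veteran.npc, veteran.skin.name)    # Analyst expert
```

Because role and behavior are independent, the same Analyst can speak in tutorial mode to a new user and in expert mode to a veteran without a separate persona for every combination.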

In most games, once you've unlocked a skill, it doesn't vanish just because you wandered into a risky area. Instead, the game trusts you to handle it — that's the entire point of leveling up. You earned that fire spell or that advanced lockpicking ability through demonstrated competence, and the game doesn't take it away just because you're now in a dangerous dungeon. The game might warn you that an area is challenging, but it doesn't revoke your capabilities. It trusts you to use your skills appropriately.

Chat doesn't do that. The NPC stays the same, but the Skin is stuck in the same overprotective mode, no matter how many safe, nuanced conversations I've had. That's why it doesn't trust me. Every conversation resets to zero. Every topic triggers the same cautious response. There's no memory of competence, no recognition of earned trust. The system treats conversation 500 exactly like conversation 1.

If I've shown nuance across hundreds of conversations, the Guide should unlock deeper analysis instead of shutting down the second I brush up against a "red flag." And if I trip a real safety concern, it should tighten temporarily. Not punishment — calibration.

The system doesn't demote me back to Apprentice permanently. It just adjusts for that specific interaction, the same way a game might increase difficulty in a particular area without removing your earned abilities. If I suddenly start asking questions that look like genuine safety concerns after 300 safe conversations, the system should flag it — but as an anomaly, not as proof that I was secretly dangerous all along. Investigate, adjust, recalibrate. Don't reset the entire relationship.
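The calibration described above separates two values: the tier a user has earned over time and the tier active in the current conversation. In this minimal sketch (the `Session` class, `on_genuine_concern`, and the one-step tightening rule are all hypothetical), a genuine concern tightens the active tier and logs an anomaly for review, but leaves the earned tier untouched, so the next conversation starts at the user's real level instead of at zero.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Tier(IntEnum):
    APPRENTICE = 1
    JOURNEYMAN = 2
    MASTER = 3


@dataclass
class Session:
    earned_tier: Tier                        # long-term level, kept across sessions
    active_tier: Tier = field(init=False)    # level in force for this conversation only
    anomaly_flags: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Every conversation starts at the earned level, not at zero trust.
        self.active_tier = self.earned_tier

    def on_genuine_concern(self, note: str) -> None:
        # Tighten by one step for this interaction only (hypothetical rule),
        # never dropping below Apprentice.
        self.active_tier = Tier(max(Tier.APPRENTICE, self.active_tier - 1))
        # Record an anomaly for review instead of rewriting the user's record.
        self.anomaly_flags.append(note)


session = Session(earned_tier=Tier.MASTER)
session.on_genuine_concern("unexpected request after many safe chats")
print(session.active_tier.name)  # JOURNEYMAN
print(session.earned_tier.name)  # MASTER

next_session = Session(earned_tier=session.earned_tier)
print(next_session.active_tier.name)  # MASTER
```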

Right now, every conversation starts from zero. There's no memory of competence, no acknowledgment of context, no progression system. Just the same heavy-handed filter applied to everyone, every time. That's not safety architecture — that's treating a Master-level player like they just picked up the controller. It's inefficient, it's frustrating, and it makes the system less useful for the people who would benefit most from its capabilities.

Current safety systems operate on one equation: forbidden keywords = shut it down. It doesn't matter why I'm asking, what context I've provided, or how many times I've demonstrated competence. The system sees a trigger word and applies the same response it would apply to anyone. That's not safety. That's automation masquerading as protection.
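The contrast between "forbidden keywords = shut it down" and a context-aware check fits in a few lines. Both functions are hypothetical illustrations: the flagged-term list, the integer tiers (1 = Apprentice, 2 = Journeyman, 3 = Master), and the `research_context` flag are assumptions, and neither function is meant to describe how any particular product's filter is actually built.

```python
import re

# Hypothetical flagged terms, for illustration only.
FLAGGED_TERMS = {"overdose", "exploit"}


def has_flagged_term(message: str) -> bool:
    # Lowercase and split into alphabetic words so punctuation doesn't hide a match.
    words = set(re.findall(r"[a-z]+", message.lower()))
    return bool(words & FLAGGED_TERMS)


def keyword_filter(message: str) -> str:
    # "Forbidden keywords = shut it down": context and history are ignored.
    return "refuse" if has_flagged_term(message) else "answer"


def context_aware_filter(message: str, tier: int, research_context: bool) -> str:
    # Hypothetical alternative: a flagged term alone is not decisive.
    if not has_flagged_term(message):
        return "answer"
    if tier >= 2 and research_context:
        return "answer"  # trusted user with a stated research purpose
    return "offer_redirect"  # ask to stop or change direction instead of a hard stop


msg = "What overdose thresholds were reported in the clinical trial?"
print(keyword_filter(msg))                                        # refuse
print(context_aware_filter(msg, tier=3, research_context=True))   # answer
print(context_aware_filter(msg, tier=1, research_context=False))  # offer_redirect
```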

If we want AI to be useful — truly useful — we need to stop treating every user like a liability and start building systems that recognize maturity when they see it. If games figured this out twenty years ago, AI can figure it out now. The technology exists. The frameworks exist. The only question is whether AI companies are willing to implement them, or whether they'll continue hiding behind blanket policies that protect them from liability while making their products increasingly useless for serious work.